
Trending in Education — SXSW EDU 2024

To the best accomplice I have found in promoting what we are doing at Bast: thank you, Mike Palmer, for the fantastic experience of being on your podcast, in real life, during SXSW EDU. Stepping onstage with such a unique and thoughtful crew was one of the highlights of my time in Austin. Even though I am an introvert and need my recovery time, in-person is how I prefer to do these things. Human factors matter.

This conversation is RELEVANT to all people, and I wanted to give attribution and note some of my favorite things:

Ancestral Intelligence: “artificial” implies that something can come from nothing, and I’m afraid that’s not right. It is about our ancestral understanding and about diversifying the inputs to be inclusive of everyone, akin to the 51-ish archaeologists who have written and depicted human history. We need more people to provide input to our Ancestral Intelligence. Thank you, Adam Starcaster, for this lovely train of thought.

AI that shows its work: AI that can explain itself increases adoption. This is human-centered AI, and it is the focus of Bast AI. Below is our poster for our session.

Mental Models, by the stunning Dr. Robin Naughton. This is definitely the best trend and is #1 on our list. It is incredible to think about how our views and mental models can be understood. How do we map out mental models? How do we draw them out? How can we be conscious of mental models in DEI? Robin gives the best contrast for this thought problem: moving from an ally to an accomplice.

Consider the contrast between ally and accomplice. Our mental model of an ally is a friend, whereas our “social” mental model of an accomplice is tied to being a criminal. To many of us, the difference is passive-ally-friend vs. active-accomplice-conspirator. I want to be an accomplice who helps underrepresented or nondominant groups be seen and understood. THIS contrast is something I will take with me into all my talks and spread far and wide.

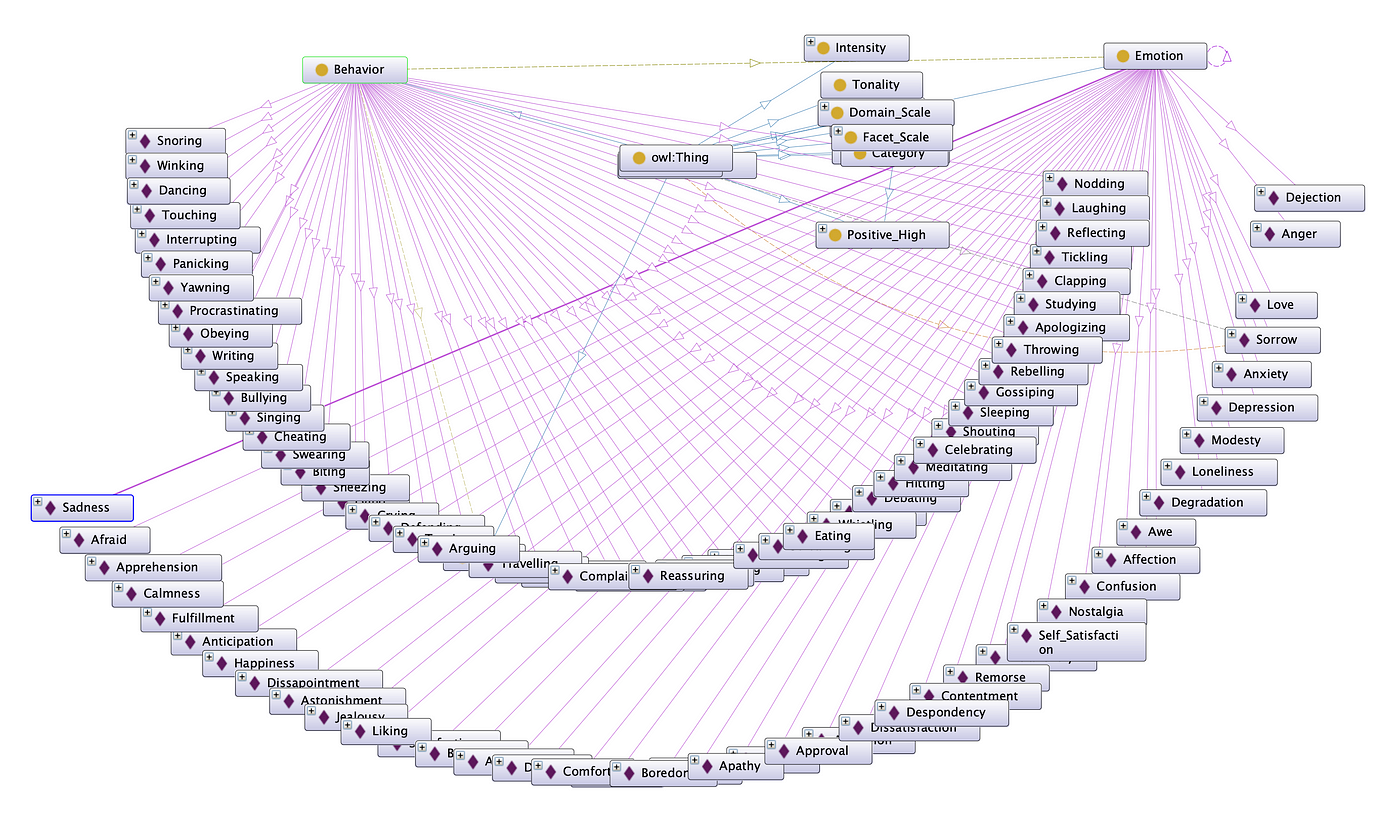

The work we do at Bast AI involves creating visual mental models and knowledge graphs, or ontologies, of the language a person uses to describe their mental models. This helps people become more aware of how others see the world. Below is a visual mental model of emotions and behaviors based on the Myers-Briggs Type Indicator (MBTI), a way to give people vocabulary for how they may behave and feel.

Enshittification and premium vs. non-premium. The older models are better and more widely used, whereas the newer models already show the regurgitation of AI-generated text. Premium vs. non-premium is exacerbating the socio-economic and access gap. The gap between the haves and have-nots is amplified by what happened during the pandemic.

Melissa Griffith calls all AI “Fred” and has honed her interrogatory muscle on the back of ChatGPT, Claude, and Perplexity. She asks the most fabulous questions and talks about hyper-personalization. She challenges us to think about the fact that the information is out there and AI allows humans to synthesize it. How can we use it effectively?

Doing AI for real is HARD and requires many more roles than people understand, especially at scale in an enterprise. How do we do AI with consent? How did we grow an entire generation of humans who think internet content is free for their commercial use? How do we credit the authors with citations? When GenAI creates wrong citations, do students have the critical thinking skills to discern that the citations are incorrect? What is AI good for? How do we integrate it into learning and teaching?

“AI is great if you are already wise” is my favorite quote from the amazing Erin Schnabel. If you are already wise, you know the right questions and are discerning. The language models are built to generate and stitch together text; they find patterns in text and sew them together. Analogies, metaphors, scenario questions, etc. We have a generational gap when it comes to valuing attribution. We (who are already wise) know that understanding takes work: “Understanding is not an act. It is a labor.” Therefore, attribution feels good and righteous because we have done the work. We can take what we love from the past or from our people and remix it to make it relevant. We need to teach this work, this labor of understanding, and give people the feeling of doing something good when they do the work to provide attribution. This feeling drove me to write this article, modeling the message.

We can and should choose to interact only with AI that “models” what we value as human beings. We have choices: just as we look to our great teachers in nature, we should look for AI systems that model what we value. I am a massive fan of biomimicry, which can help us learn how to value the ecosystem to which we all belong. There is so much to learn in Janine Benyus’s book on learning from nature.

Analog interaction, in addition to AI technology: Dr. Robin Naughton reminds us to do human things when it comes to collaboration and communication, like being on a podcast in person :) It is still a necessity.

These are words to live by, and a way to ensure humans are aligned with the AI created from our data, aka the artifacts of our experiences:

“Everyone involved in creating an AI system, including the companies that sponsor it, is accountable for that system’s impact on the world.”

Here is the complete reference for the ideas and thoughts from our show.